Crawling Vs Scraping

What Is The Difference Between Web-crawling And Web-scraping?

Crawling is used to extract data from sources such as search engines (Google, Yahoo) and e-commerce websites; afterward, you filter out unnecessary information and keep only what you need by scraping it. The two may sound the same, but there are some key differences between scraping and crawling. Both go hand in hand in the overall process of data gathering, so usually, when one is finished, the other follows.

Web Scraping Vs Web Crawling: What’s The Difference?

Conveniently, Python also has strong support for data manipulation once the web data has been extracted. R is another good choice for small- to medium-scale post-scraping data processing. While web scraping can be used for many good purposes, bad actors can also develop malicious bots that cause harm to website owners and others. Professional web scrapers must always make sure they stay within the bounds of what the broader online community generally considers acceptable. In particular, web scrapers must be careful not to overload websites in ways that could disrupt their normal operation.
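One concrete way to stay within those bounds is to honor a site's robots.txt, including any crawl delay it asks for. The sketch below uses Python's standard `urllib.robotparser`; the robots.txt content, the user-agent name and the URLs are hypothetical, and it assumes the file has already been downloaded (against a live site you would call `set_url()` and `read()` instead of `parse()`).

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, assumed to be already downloaded.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been fetched."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def may_fetch(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True if robots.txt allows this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


parser = build_parser(ROBOTS_TXT)
agent = "example-research-bot"  # hypothetical bot name
print(may_fetch(parser, agent, "https://example.com/products/1"))   # True
print(may_fetch(parser, agent, "https://example.com/private/data"))  # False
print(parser.crawl_delay(agent))                                      # 5
```

The crawl delay returned here can feed directly into whatever pause your scraper inserts between requests.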

In This Article, Read An Explanation Of The Differences Between Web Scraping And Web Crawling.

Further steps usually have to be taken to clean, transform, and aggregate the data before it can be delivered to the end user or application. Finally, the data may be summarized at a higher level, for example to show average prices across a category. Generally, web scraping is used as a much more efficient alternative to collecting data manually, because it allows far more data to be collected at a lower cost and in a shorter time.
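As a minimal sketch of those clean, transform and aggregate steps, the Python below normalizes scraped price strings and category labels and then computes the average price per category. The records and field names are hypothetical, and it assumes prices use US-dollar notation with commas as thousands separators.

```python
from collections import defaultdict
from decimal import Decimal
from typing import Optional

# Hypothetical raw rows as a scraper might emit them: inconsistent labels,
# currency symbols, thousands separators, stray spaces and a missing price.
raw_rows = [
    {"category": " Laptops", "price": "$1,199.00"},
    {"category": "laptops ", "price": "$999.00"},
    {"category": "Phones", "price": " $499.50 "},
    {"category": "Phones", "price": ""},
    {"category": "phones", "price": "$700.50"},
]


def clean_price(text: str) -> Optional[Decimal]:
    """Transform a price string such as '$1,199.00' into Decimal('1199.00');
    return None when the field is empty so the row can be skipped."""
    text = text.strip().replace("$", "").replace(",", "")
    return Decimal(text) if text else None


def average_price_by_category(rows):
    """Clean each row, normalize its category label, then aggregate."""
    totals = defaultdict(Decimal)
    counts = defaultdict(int)
    for row in rows:
        price = clean_price(row["price"])
        if price is None:
            continue  # drop rows without a usable price
        category = row["category"].strip().lower()
        totals[category] += price
        counts[category] += 1
    return {cat: totals[cat] / counts[cat] for cat in totals}


print(average_price_by_category(raw_rows))
```

`Decimal` is used instead of `float` so that currency amounts are not distorted by binary rounding during the summation.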

What Is Web Scraping?

The data is then consolidated in one location for ease of reference and analysis. You can also learn how customers view your company from customer reviews and feedback on blogs and social media platforms. While some web scraping tools use a minimal UI and a command line, others offer a full-fledged UI where the user simply clicks on the data to be scraped. You can use Octoparse to scrape a website with its extensive functionality. It has two operation modes, Task Template Mode and Advanced Mode, which non-programmers can pick up quickly. The user-friendly point-and-click interface guides you through the whole extraction process. As a result, you can pull website content easily and save it in structured formats such as Excel, TXT, HTML or your own databases in a short time.

How Does Web Scraping Work?

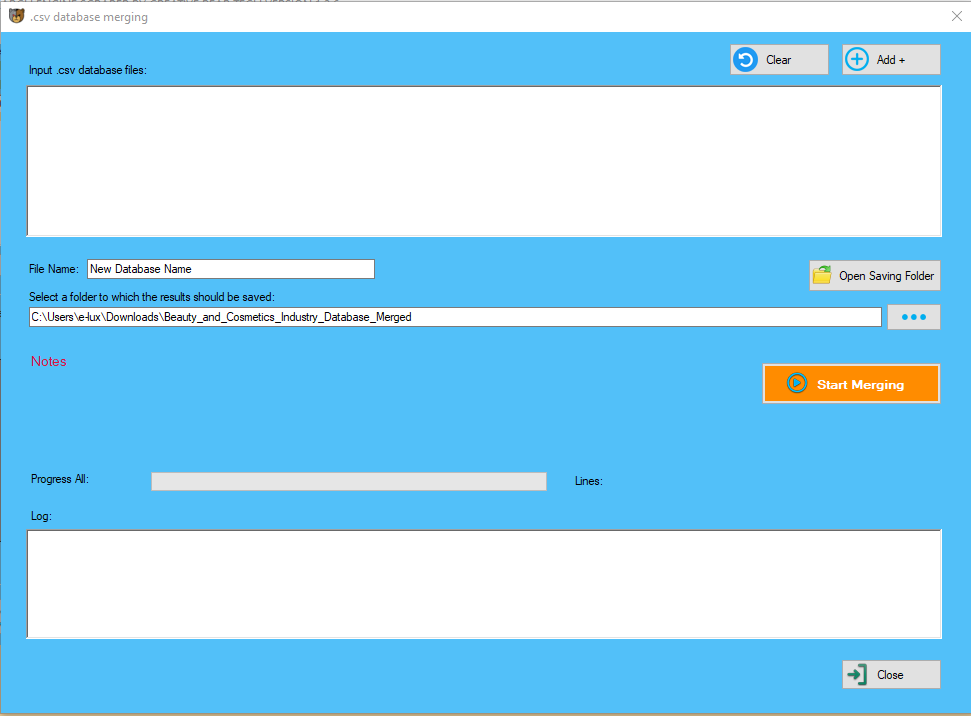

You can always write directly into SQLite or any other database of your choice when you're implementing a DIY solution. If you want your web scraping ideas to come to life without a hitch, use a web scraping service. One mark of the best web scraping services is collecting data in compliance with the website's terms of use and privacy policy. If you're an employee or a student of a government-accredited institution working on a research project, FindDataLab will grant you web scraping services equal to 1000jQuery.

Web crawling, which is done by a web crawler or spider, is the first step of scraping websites. This is the step where our web scraping tool visits the page we need to scrape; it then proceeds to the actual web scraping, and afterward "crawls" on to the next page. It has become convenient to cover the whole process of data acquisition with the single term web scraping when, in fact, several steps are needed to collect usable data. Essentially, automated web scraping consists of website crawling, data scraping, data extraction and data wrangling. At the end of this process, we should end up with data that is ready for analysis.

Websites don't like aggressive crawling and scraping of their data at such a fast clip. In trying to speed up scraping, your scraper may send requests too frequently and slow down or even bring down the server. Web scraping can relieve the burden of hunting for data, as it can make all of it available in one place, but avoiding blocks takes work, especially if you're trying to scale your web scraping project. Therefore, a fair question is: why not pay from the start, get a fully customized product with anti-blocking precautions already implemented, and free up your valuable time?
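The crawl, scrape, next-page loop can be sketched in plain Python. This is a minimal breadth-first illustration, not a production crawler: the `SITE` dictionary stands in for real HTTP responses (in practice `fetch` would wrap `urllib.request.urlopen` plus request delays), the `pages` table is a hypothetical schema, and only page titles are scraped. Results go straight into SQLite, as a DIY solution might do.

```python
import sqlite3
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class PageParser(HTMLParser):
    """Collects the <title> text and every <a href> link on one page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def crawl(start_url, fetch, db, max_pages=100):
    """Breadth-first crawl of one site: fetch a page (crawling), store its
    title in SQLite (scraping), then queue same-site links not seen yet."""
    db.execute("CREATE TABLE IF NOT EXISTS pages (url TEXT PRIMARY KEY, title TEXT)")
    site = urlparse(start_url).netloc
    seen, queue, visited = {start_url}, deque([start_url]), 0
    while queue and visited < max_pages:
        url = queue.popleft()
        html = fetch(url)
        visited += 1
        if html is None:  # page missing or request failed
            continue
        parser = PageParser()
        parser.feed(html)
        db.execute("INSERT OR REPLACE INTO pages VALUES (?, ?)",
                   (url, parser.title.strip()))
        for href in parser.links:
            link = urljoin(url, href)
            if urlparse(link).netloc == site and link not in seen:
                seen.add(link)
                queue.append(link)
    db.commit()


# Hypothetical three-page site standing in for real HTTP responses.
SITE = {
    "https://example.com/": '<title>Home</title><a href="/p/1">1</a>'
                            '<a href="https://other.org/">elsewhere</a>',
    "https://example.com/p/1": '<title>Product 1</title><a href="/p/2">next</a>',
    "https://example.com/p/2": '<title>Product 2</title><a href="/">home</a>',
}

db = sqlite3.connect(":memory:")
crawl("https://example.com/", SITE.get, db)
print(db.execute("SELECT url, title FROM pages ORDER BY url").fetchall())
```

The `seen` set keeps the crawler from revisiting pages, and the netloc check keeps it on the starting site; `max_pages` is a simple safety cap.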
Usually, it's possible to increase the number of tasks and pages scraped by buying a subscription or paying a one-time fixed fee. If your project requires some kind of data enrichment or dataset merging, you will have to take extra steps to make it happen. This can be another time sink, depending on the complexity of your web scraping project. Handling large volumes of data in a scalable manner can also be tricky in web scraping. There are various point-and-click tools that let you grab data, but they are often not very easy to use or produce poor-quality data. To save time and avoid being surprised by unknown unknowns, consider hiring a web scraping service such as FindDataLab. As professionals with hands-on experience in projects of varying difficulty, FindDataLab can find a solution to almost any web scraping problem. Don't hesitate to contact us and we'll get back to you shortly.

IP address blacklisting is among the most basic anti-scraping measures a server can implement. It usually happens when many requests are sent one after another with little time in between. First, we need to consider request delays as well as request randomization; both are fairly easy to implement if you have a custom web scraping script. In addition to randomizing your request rates, you should also make sure the server doesn't see a single IP address browsing 10,000 pages of a website.
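Request delays, randomization and IP rotation can be combined in a small scheduler. The sketch below rests on several assumptions: the proxy addresses are placeholders, the 2 to 6 second delay range is an arbitrary example rather than a recommended value, and `fetch_via_proxy` simply uses `urllib.request.ProxyHandler`. Each URL is paired with the next proxy in round-robin order and a random pause.

```python
import itertools
import random
import time
import urllib.request

# Hypothetical proxy pool: placeholders only, substitute proxies you may use.
PROXIES = ["http://10.0.0.1:8080", "http://10.0.0.2:8080", "http://10.0.0.3:8080"]


def polite_schedule(urls, proxies, min_delay=2.0, max_delay=6.0, rng=random):
    """Pair each URL with the next proxy (round-robin) and a random pause
    drawn uniformly from [min_delay, max_delay] seconds."""
    proxy_cycle = itertools.cycle(proxies)
    for url in urls:
        yield url, next(proxy_cycle), rng.uniform(min_delay, max_delay)


def fetch_via_proxy(url, proxy, timeout=30):
    """Download one page through the given HTTP(S) proxy using urllib."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as response:
        return response.read()


def crawl_politely(urls, fetch=fetch_via_proxy, sleep=time.sleep, **schedule_args):
    """Sleep for the randomized delay before each request, then fetch it."""
    pages = []
    for url, proxy, delay in polite_schedule(urls, PROXIES, **schedule_args):
        sleep(delay)
        pages.append(fetch(url, proxy))
    return pages
```

Passing `fetch` and `sleep` as parameters lets you check the schedule without touching the network; in a real run the defaults call `time.sleep` and the proxy opener.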

To get data of such scale and complexity, we crawl the source websites. Hence, web scraping has become a pressing need for businesses that require large amounts of data. The European Commission mandates that banks create dedicated interfaces (APIs) and prohibits the use of screen scraping from September 2019.

As for scraping, there are many different tools on the market, called scrapers. Which one you should use depends on your preferred scraping methods. If you need to download the data gathered, you would go for web scraping instead. APIs tend to be updated very slowly because they are usually at the bottom of the priority list. If you scrape the content off the website instead, you get what you see. Data collected through web scraping is also easier to hold accountable, because simple tests can compare it with what you actually see on the site. Most systems you come across today already have an API developed for their customers, or at least have one on their to-do list. APIs are great if you actually need to interact with the system, but if you're only trying to extract data from the website, web scraping is a much better option.

In the full-time case, dedicated web scrapers may be responsible for maintaining infrastructure, building projects, and monitoring their performance. Though most professional web scrapers fall into the first category, the number of full-time web scrapers is growing. Despite this acceleration in growth over the past five years, web scraping remains a nascent industry. While web crawling by search engines has been thoroughly institutionalized, web scraping is still seen as more of a "hacker" skill, often practiced by individual developers in their free time. Any business looking to extract useful data from the web will find it easier to do so using web scraping tools.
Scrapy is a web crawling framework that comes with a good number of tools to make web crawling and scraping easy. It is built on Twisted, an asynchronous networking framework that makes non-blocking I/O calls to servers. Because Scrapy is asynchronous and non-blocking, it performs best and is the fastest of the three tools. Another advantage of Scrapy over the other two tools is that it comes with modules both for sending requests and for parsing responses. This article discusses these three popular tools and explains each of them in full. Crawling is essentially what Google, Yahoo, MSN, etc. do: looking for ANY information. Scraping is generally targeted at certain websites for specific data, e.g. for price comparison, so scrapers are coded quite differently. Helium Scraper is visual web data crawling software that works quite well when the association between elements is small, and users can access online templates for various crawling needs. Octoparse is a robust website crawler for extracting almost any kind of data you need from websites.
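To illustrate how a targeted scraper differs from a crawler, the following sketch ignores links entirely and pulls only two fields, a product name and a price, out of a page using Python's built-in `html.parser`. The markup and the `product`, `name` and `price` class names are hypothetical; real sites use their own structure, which is exactly why scrapers end up coded per site.

```python
from html.parser import HTMLParser

# Hypothetical product-listing markup.
HTML = """
<div class="product"><span class="name">Desk lamp</span><span class="price">$24.99</span></div>
<div class="product"><span class="name">Office chair</span><span class="price">$149.00</span></div>
"""


class PriceScraper(HTMLParser):
    """Targeted scraper: keeps only the text of span.name and span.price
    inside each div.product, and ignores everything else on the page."""

    def __init__(self):
        super().__init__()
        self.items = []      # one dict per product block
        self._field = None   # field whose text we are currently inside

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "div" and "product" in classes:
            self.items.append({})
        elif tag == "span" and self.items:
            self._field = next((f for f in ("name", "price") if f in classes), None)

    def handle_data(self, data):
        if self._field:
            item = self.items[-1]
            item[self._field] = item.get(self._field, "") + data

    def handle_endtag(self, tag):
        if tag == "span":
            if self._field:
                item = self.items[-1]
                item[self._field] = item.get(self._field, "").strip()
            self._field = None


scraper = PriceScraper()
scraper.feed(HTML)
print(scraper.items)
```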

- The range of web scraping tools runs from ready-made web scraping software to DIY scripting, with web scraping services as a customized solution in between.

- Website scraping tools can be very versatile if you know how to use them.

- Coming up with web scraping ideas is usually not too complicated.

- The most challenging part is finding a way to aggregate the data.

From what you will learn, you will know which tool to use depending on your skills and individual project requirements. If you are not really familiar with web scraping, I advise you to read our guide to web scraping and also check out our tutorial on how to build a simple web scraper using Python. If you want to keep abreast of industry trends, web scraping tools can be of great help. They are powerful market research tools that collect data from various data providers and research firms. You can, of course, extract financial statements and all the usual data from websites in a much simpler and faster way through web scraping. Scrapy is a high-quality web crawling and scraping framework that is widely used for crawling websites. It can be used for a variety of purposes such as data mining, data monitoring, and automated testing. If you are familiar with Python, you will find Scrapy fairly easy to get on with. Data quality assurance and timely maintenance are integral to running a web crawling setup. Solution providers such as PromptCloud take end-to-end ownership of these aspects. It is even more difficult to turn the unstructured data on the web into perfectly structured, clean, machine-readable data. Data quality is something many companies like PromptCloud take pride in. The greater the demand for data, the more complex it becomes to crawl it. Very few people have talked about this before when comparing web scraping tools. Think about why people like to use WordPress to build a CMS instead of other frameworks: the key is the ecosystem. So many themes and plugins can help people quickly build a CMS that meets their requirements. The two Python web scraping frameworks are created to do different jobs.
Selenium is used only to automate web browser interaction, while Scrapy is used to download HTML, process data and save it. Of course, you can always look for a specific web scraping software solution that gives you the file format you need, but what happens when your project requires a different output file format? A good place to start would be to implement a DIY solution and turn to web scraping services for scaling. This way you gain some hands-on experience and have realistic expectations of what you'll get out of your web scraper. As previously mentioned, typical outputs include Excel sheets and CSV files. This applies mostly to using web scraping services, since the output must be portable. Still, the industry is slowly becoming more institutionalized, as evidenced by the growth of corporate web scraping departments and the publication of web scraping standards by various organizations. The first challenge in web scraping is understanding what is possible and identifying what data to collect. This is where an experienced web scraper has a significant advantage over a novice. Even once the data has been identified, many challenges remain. Many kinds of software and programming languages can be used to carry out these post-scraping tasks.
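For portable output, CSV is the simplest common denominator, and Excel opens it directly. A minimal sketch using Python's `csv` module follows; the records and column names are hypothetical. Writing through the `csv` module, rather than joining strings by hand, takes care of quoting values that contain commas.

```python
import csv
import io

# Hypothetical scraped records; note the comma inside one of the names.
rows = [
    {"name": "Desk lamp", "price": "24.99", "url": "https://example.com/p/1"},
    {"name": "Office chair, black", "price": "149.00", "url": "https://example.com/p/2"},
]


def to_csv(records, fieldnames, stream):
    """Write records as CSV with a header row. csv.DictWriter quotes any
    value containing the delimiter, so embedded commas do not break columns."""
    writer = csv.DictWriter(stream, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(records)


buffer = io.StringIO()
to_csv(rows, ["name", "price", "url"], buffer)
print(buffer.getvalue())
```

To write to disk instead of memory, open the file with `open("products.csv", "w", newline="", encoding="utf-8")` and pass it as `stream`; `newline=""` is what the `csv` documentation recommends for files used with the module.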

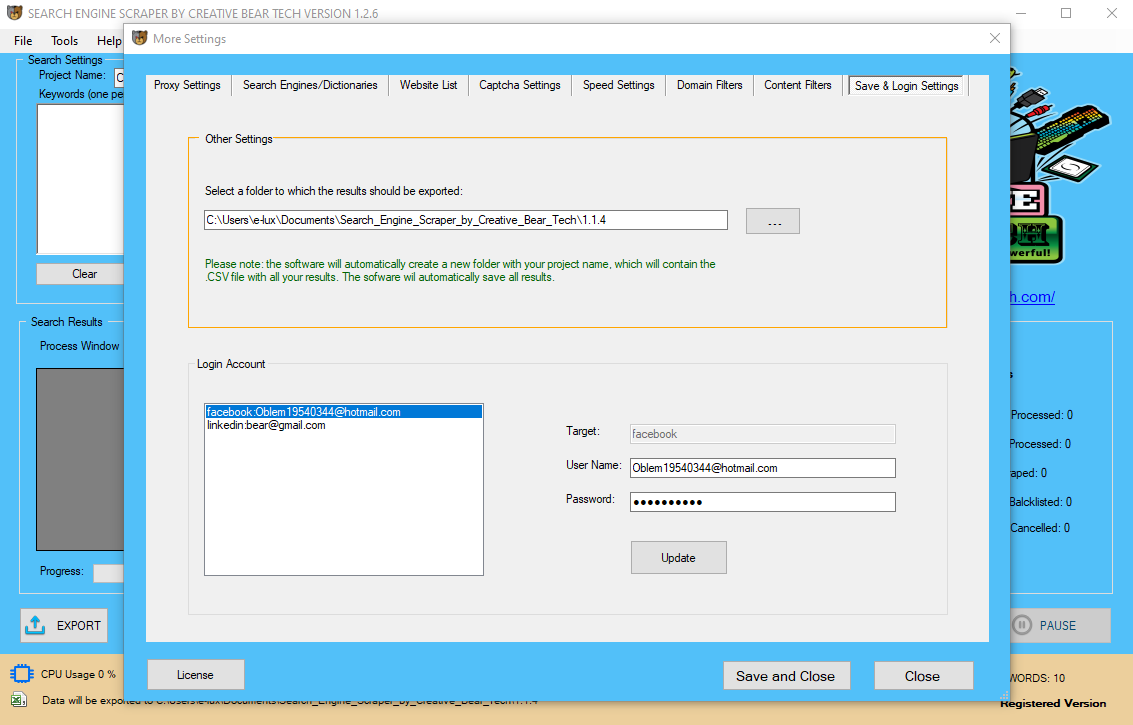

Understandably, you may want to rely on a service provider to extract web data for you. With years of experience in the web data extraction space, PromptCloud is now able to take on web scraping projects of any complexity and scale. Web scraping (also referred to as web data extraction) is more of a targeted process. Equity research used to be limited to reading a company's financial statements and investing in shares accordingly. Now, every news item, data point, and measure of sentiment matters in identifying the right stock and its current trend. Web scraping can fetch all the market-related data and let you see the big picture. The FCA, the UK regulator, likewise thinks that data sharing should happen over dedicated bank APIs and therefore should not require screen scraping by service providers. Your bank ensures that service providers can access only the data you choose, and only for the period you decide. You can revoke or cancel permission through the bank app or website. You and your bank can control the identities of the services that access your data.

Challenges while using a tool: the picture changes when you add a few more websites that are not all that simple, and have many more fields to collect. For example, you can use the sleep function from Python's time module to space out your requests. With the freemium or free-to-paid web scraping software model, you'll still have to pay eventually. Moreover, while data is available everywhere on websites, it is not available in a usable format. Web scraping can extract the data in a format of your choice, such as Excel, so that you can process it and use it the way you want. The major drawback of Scrapy is that it is not a beginner-centric tool; I had to drop the idea of using it after I found it was not beginner-friendly.
One major setback of Scrapy is that it doesn't render JavaScript; you have to send Ajax requests to get data hidden behind JavaScript events, or use a third-party tool such as Selenium. You can do the whole thing yourself, come to a web scraping service provider with a half-baked dataset, or have the entire project carried out by professionals. As previously mentioned, when using a data scraping service, you need to specify the output file format along with the data frame's configuration. You have to think about what you want to do next with the data. If you need a simple Word file with the addresses of restaurants within a 30-mile radius of your office, then this doesn't apply to you. But if you want to perform exploratory and other kinds of statistical analysis on your data, you have to think about the structure of the output. Scalability: most of these vendors design their platforms to scale with as many users and sources as possible. As long as such design choices are incorporated, scale is not an issue and any kind of requirement can be handled. We have had clients who tried running a scraping tool for a whole day to extract data from a huge website, only for their laptops to die. There are many frameworks available for getting started with your own small projects.
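When content is loaded by JavaScript, it is often possible to call the underlying Ajax endpoint directly instead of rendering the page. The sketch below assumes a hypothetical JSON endpoint that takes a `page` parameter and returns `items` plus a `has_more` flag, much as an infinite-scroll page might; the URL and keys are illustrative, not a real API. `fetch_text` is injected so the example runs offline.

```python
import json
from urllib.parse import urlencode

# Hypothetical Ajax endpoint, e.g. one spotted in the browser's network tab.
API_URL = "https://example.com/api/products"


def page_url(page: int) -> str:
    """Build the URL for one page of results, as infinite scroll would request it."""
    return f"{API_URL}?{urlencode({'page': page})}"


def scrape_ajax(fetch_text, max_pages=50):
    """Request successive pages of JSON until the endpoint reports no more data."""
    items = []
    for page in range(1, max_pages + 1):
        payload = json.loads(fetch_text(page_url(page)))
        items.extend(payload["items"])
        if not payload.get("has_more"):
            break
    return items


# Canned responses so the sketch runs without a network connection.
FAKE_RESPONSES = {
    page_url(1): json.dumps({"items": [{"id": 1}, {"id": 2}], "has_more": True}),
    page_url(2): json.dumps({"items": [{"id": 3}], "has_more": False}),
}

print(scrape_ajax(FAKE_RESPONSES.__getitem__))  # [{'id': 1}, {'id': 2}, {'id': 3}]
```

Against a live site, `fetch_text` could be something like `lambda url: urllib.request.urlopen(url, timeout=30).read().decode("utf-8")`, combined with request delays.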

Given the above facts about web data extraction, or web scraping, outsourcing to a fully managed, customized solution provider like PromptCloud is the better option. Web scraping requires a team of talented developers to set up and deploy the crawlers on optimized servers for the extraction. Yet many businesses that need such data should stay focused on their core business rather than on data extraction. It's common to click on every field to be captured from every website on your list and then be irritated by surprises after you have submitted your request. Worse, sometimes a crawl progresses to 99% and then fails, leaving you in a wonderland. So you send a query to the tool's support center and wait to hear something like "the site blocked our bots." Web scraping is a widely recognized term nowadays, not just because so much data exists around us, but more because so much is already being done with that data. Let's try to analyze the differences between opting for a tool that comes with DIY elements and picking a hosted data acquisition solution on a vendor's stack. Alteryx allows users to load data from raw data sources, apply transformations, and output the data to be read by other applications. Alteryx can be part of web scraping infrastructure but does not perform the actual extraction of data from websites. Many jobs require web scraping skills, and many people are employed as full-time web scrapers. In the first case, programmers or research analysts with other primary duties take on a number of web scraping tasks.

We discuss some of the advantages of web crawling over using an API. We talk more about the robots.txt file and identifying yourself in the section on web scraping politely; also make sure to check out FindDataLab's ultimate guide to ethical web scraping. Regardless of the tool you are using, you should identify yourself when web scraping to improve your chances of not ending up in a lawsuit. Not just DIY tools but even in-house teams struggle a lot as website complexity increases. Many sites are adopting AJAX-based infinite scrolling to improve the user experience. We live in a data-centric world, where data is the most powerful commodity of all. We use large amounts of data for purposes such as machine learning, data mining, market research, financial research, and so on. Excel offers the lowest learning curve but is limited in its capabilities and scalability. R and Python are open-source programming languages that require programming skills but are almost limitless in their ability to manipulate data. Large-scale applications may require more advanced techniques that leverage languages such as Scala and more advanced hardware architectures. The actual extraction of data from websites is usually just the first step in a web scraping project. When web scraping, we need to consider a few things from a legal standpoint: obtaining written consent from the website's owner or the data's intellectual property owner, or crawling the website in accordance with its Terms of Service. Some ready-made web scraping tools also offer an IP address rotation option, so make sure to check for it when choosing the tools for your web scraping project.
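Identifying yourself usually means sending a descriptive User-Agent header that names your bot and gives a way to contact you. A minimal sketch with Python's `urllib.request` follows; the bot name and email address are hypothetical placeholders.

```python
import urllib.request

# Hypothetical bot name and contact address; replace with your own details.
USER_AGENT = "ExampleResearchBot/1.0 (+mailto:data-team@example.com)"


def make_request(url: str) -> urllib.request.Request:
    """Build a GET request whose User-Agent names the bot and its operator."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


req = make_request("https://example.com/products")
# urllib normalizes header names with str.capitalize(), hence "User-agent".
print(req.get_header("User-agent"))
# Sending it would be: urllib.request.urlopen(req, timeout=30)
```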
Luckily, there are quite a few ready-made scraper APIs supported by proxy infrastructure that professional developer teams maintain remotely. We'll go over these web scraper APIs step by step, so let's get started. If you are concerned about the European Union's 2018 General Data Protection Regulation (GDPR), you need to pay attention to what kinds of data you collect. In a nutshell, the GDPR puts limits on what companies can do with people's personally identifiable information, such as first name, last name, home address, phone number and email. The GDPR itself does not declare web scraping an illegal practice; instead, it states that institutions wanting to scrape people's data need the explicit consent of the person in question. IP address rotation is one of the cornerstones of an unblockable web scraper. Maintaining data quality needs constant effort and close monitoring using both manual and automated layers. This is because websites change their structures quite frequently, which can make the crawler faulty or bring it to a halt, both of which affect the output data. It's important to understand the main differences between web crawling and web scraping, but also that crawling usually goes hand in hand with scraping. When web crawling, you download information available online.
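Part of that automated monitoring layer can be as simple as tracking how often each expected field is actually filled. A sudden drop in a field's fill rate is a common sign that the site's layout changed and a selector stopped matching. The sketch below assumes a hypothetical two-field schema and a 95% threshold chosen only for illustration.

```python
REQUIRED_FIELDS = ("name", "price")  # hypothetical schema


def fill_rates(records, fields=REQUIRED_FIELDS):
    """Share of records in which each required field is present and non-empty."""
    total = len(records) or 1  # avoid division by zero on an empty batch
    return {f: sum(1 for r in records if r.get(f)) / total for f in fields}


def check_batch(records, threshold=0.95):
    """List the fields whose fill rate fell below the threshold. An empty
    batch flags every field, which is itself a sign the crawl went wrong."""
    rates = fill_rates(records)
    return sorted(field for field, rate in rates.items() if rate < threshold)


batch = [
    {"name": "Desk lamp", "price": "24.99"},
    {"name": "Office chair", "price": ""},
    {"name": "Shelf", "price": None},
]
print(fill_rates(batch))
print(check_batch(batch))  # ['price']
```

In practice a check like this would run after every crawl and alert a person, who then decides whether the scraper needs fixing.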
